Fresh off the presses

(DOI: 10.1371/journal.pone.0152719)

Phillip M. Alday

29 April 2016, Uni Adelaide

Animate and inanimate words chosen as stimulus materials did not differ in word frequency (p > 0.05).

Controls and aphasics did not differ in age (p > 0.05).

(Sassenhagen & Alday, under review at Brain & Language)

We're still answering a boring question:

Do these two populations differ systematically in the given feature?

What we actually care about is:

Is the variance observed in my manipulation better (or at least partially) explained by differences in the given feature?
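To make the contrast between the two questions concrete, here is a minimal toy sketch in plain Python. Everything in it is invented for illustration: the frequencies, the response times, the 10 ms-per-unit frequency effect, and the helper names `welch_t`, `slope` and `residuals`. Animacy has no true effect in this toy data set. The sketch first asks the boring question (do the groups differ in frequency?) and then the interesting one (what is left of the animacy effect once frequency is in the model?), using residualisation as a stand-in for fitting a model with both predictors.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic for the difference in means of a and b."""
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se


def slope(x, y):
    """Least-squares slope of y regressed on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def residuals(x, y):
    """What is left of y after removing its linear dependence on x."""
    b = slope(x, y)
    a = mean(y) - b * mean(x)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]


# Toy stimuli (all numbers invented): 20 inanimate and 20 animate words.
# The animate words are slightly more frequent on average.
freq_inanimate = [float(i) for i in range(20)]
freq_animate = [i + 1.5 for i in range(20)]

# Deterministic stand-in for item noise, identical in both groups.
wiggle = [5.0 if i % 2 == 0 else -5.0 for i in range(20)]

# Response times depend on frequency only: animacy has NO true effect.
rt_inanimate = [600.0 - 10.0 * f + e for f, e in zip(freq_inanimate, wiggle)]
rt_animate = [600.0 - 10.0 * f + e for f, e in zip(freq_animate, wiggle)]

# Boring question: do the groups differ in frequency?
t_freq = welch_t(freq_animate, freq_inanimate)

# Naive analysis: raw animate-minus-inanimate difference in response time.
raw_effect = mean(rt_animate) - mean(rt_inanimate)

# Interesting question: the animacy effect with frequency in the model.
# Removing frequency from both animacy and RT and regressing the residuals
# on each other gives the same animacy coefficient as RT ~ frequency + animacy.
freq = freq_inanimate + freq_animate
animacy = [0.0] * 20 + [1.0] * 20
rts = rt_inanimate + rt_animate
adjusted_effect = slope(residuals(freq, animacy), residuals(freq, rts))

print(f"t for frequency difference: {t_freq:.2f}")
print(f"raw animacy effect (ms):    {raw_effect:.2f}")
print(f"adjusted animacy effect:    {adjusted_effect:.2f}")
```

In this toy set the frequency difference gives a t of about 0.8, which would be reported as "did not differ", yet the raw 15 ms "animacy effect" is produced entirely by frequency and shrinks to nearly zero once frequency is part of the model.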

(DOI: 10.1371/journal.pone.0152719)

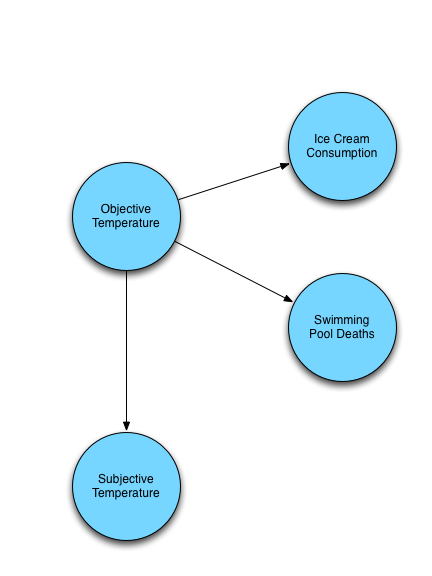

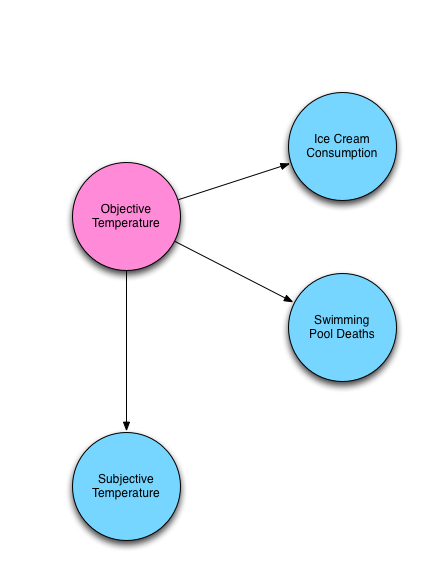

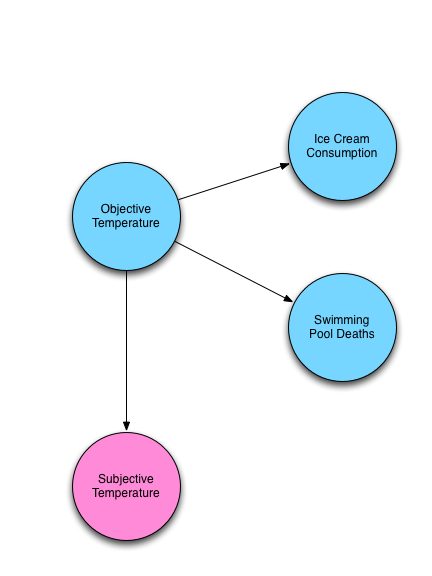

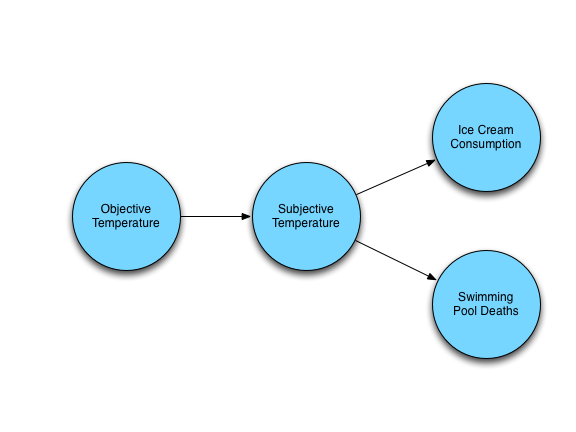

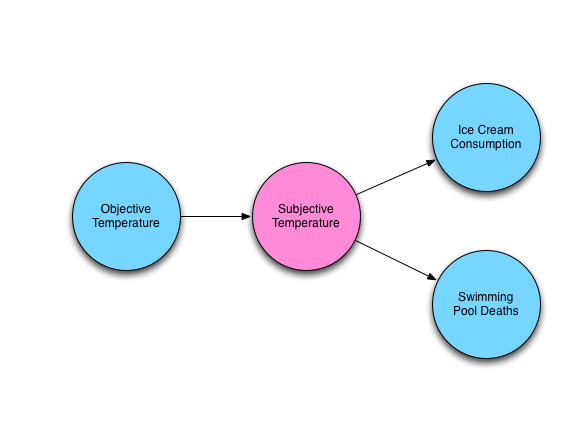

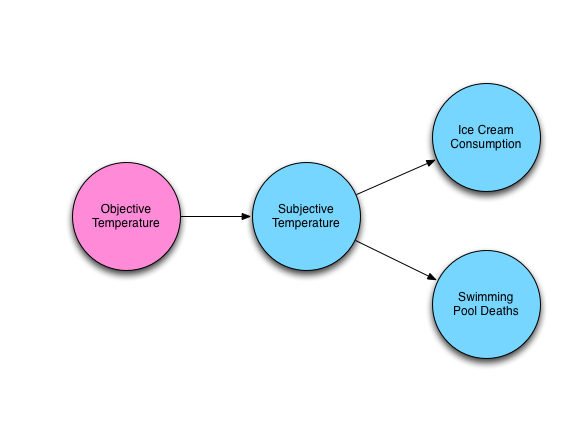

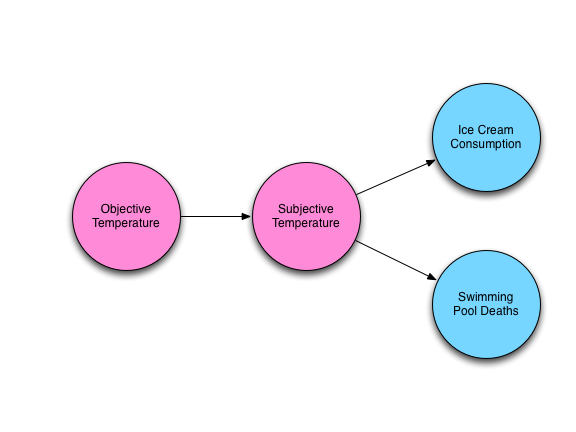

(All model diagrams from John Myles White)

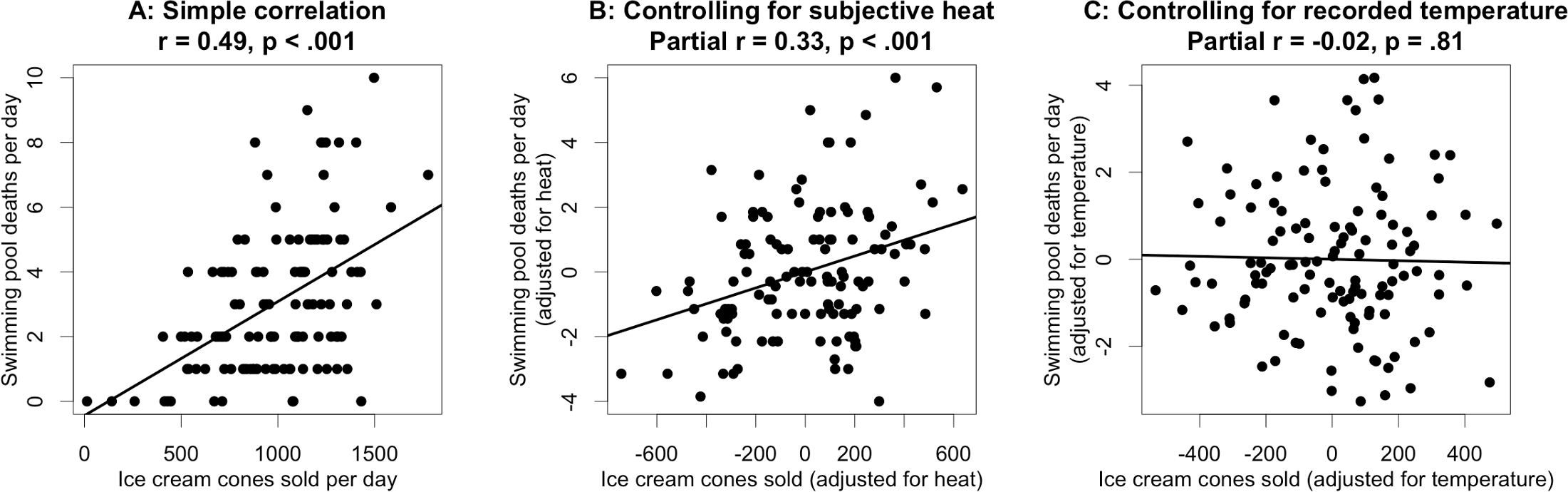

(DOI: 10.1371/journal.pone.0152719.g002)

$$Y = \beta_0 + \beta_1 X_1 + \varepsilon$$

$$\varepsilon \sim N(0, \sigma)$$

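In other words, we assume a straight-line relationship between the predictor and Y, with errors that are normally distributed around 0 with the same spread σ for every observation, and with all of the noise sitting in ε rather than in the predictor.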
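As a rough illustration of what the model above commits us to, the following sketch simulates data from it and recovers the parameters with closed-form least squares, using only the Python standard library. The parameter values, the sample size, the seed and the helper name `fit_line` are all assumptions made for the example, and σ is treated as a standard deviation. The last step deliberately breaks the "no noise in the predictor" assumption to show, under these invented settings, how the estimated slope shrinks when the predictor is measured with error.

```python
import random
from math import sqrt
from statistics import mean


def fit_line(x, y):
    """Closed-form least squares for y = b0 + b1 * x.

    Returns (b0, b1, sigma_hat), where sigma_hat is the residual
    standard deviation on n - 2 degrees of freedom.
    """
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = my - b1 * mx
    rss = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    return b0, b1, sqrt(rss / (len(x) - 2))


rng = random.Random(2016)  # fixed seed so the sketch is repeatable
n = 10_000

# Invented "true" parameters for the simulation.
beta0, beta1, sigma = 1.0, 0.5, 2.0

# Data generated exactly as the model says: noise only in epsilon.
x1 = [rng.uniform(0.0, 10.0) for _ in range(n)]
y = [beta0 + beta1 * xi + rng.gauss(0.0, sigma) for xi in x1]

b0_hat, b1_hat, sigma_hat = fit_line(x1, y)

# Now violate the assumption: we only observe a noisy version of X1.
x1_noisy = [xi + rng.gauss(0.0, 2.0) for xi in x1]
_, b1_noisy, _ = fit_line(x1_noisy, y)

print(f"estimates with clean X1: b0={b0_hat:.2f}, b1={b1_hat:.2f}, sigma={sigma_hat:.2f}")
print(f"slope with noisy X1:     b1={b1_noisy:.2f}")
```

With the predictor observed cleanly, the estimates land close to the values used to generate the data. With the noisy predictor, the slope is pulled toward zero, so part of the predictor's real influence is no longer accounted for by the model.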

You can find my stuff online: palday.bitbucket.org

I really don't understand it either.

Clark, Herbert H. 1973. “The Language-as-Fixed-Effect Fallacy: A Critique of Language Statistics in Psychological Research.” Journal of Verbal Learning and Verbal Behavior 12: 335–59. doi:10.1016/S0022-5371(73)80014-3.

Judd, Charles M., Jacob Westfall, and David A. Kenny. 2012. “Treating Stimuli as a Random Factor in Social Psychology: A New and Comprehensive Solution to a Pervasive but Largely Ignored Problem.” Journal of Personality and Social Psychology 103 (1): 54–69. doi:10.1037/a0028347.

Westfall, Jacob, and Tal Yarkoni. 2016. “Statistically Controlling for Confounding Constructs Is Harder Than You Think.” PLoS ONE 11 (3). Public Library of Science: 1–22. doi:10.1371/journal.pone.0152719.

Westfall, Jacob, David A. Kenny, and Charles M. Judd. 2014. “Statistical Power and Optimal Design in Experiments in Which Samples of Participants Respond to Samples of Stimuli.” Journal of Experimental Psychology: General 143 (5): 2020–45. doi:10.1037/xge0000014.